Winning the SEO Game with Data Science Insights

Introduction

In an era where Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing various industries, Search Engine Optimization (SEO) is no exception. As presented by Lukáš Kostka at the Digital4Sofia conference in Bulgaria, the integration of data science into SEO strategies can lead to impressive results. This article explores how leveraging data science techniques can provide valuable insights to optimize content for search engines.

We plan to release a Streamlit app with the functions described in this article in the coming weeks. If you would like access when it becomes available, please fill out our survey at https://forms.gle/gFYfFHmjS245ns1S7 and we will notify you once the app is released.

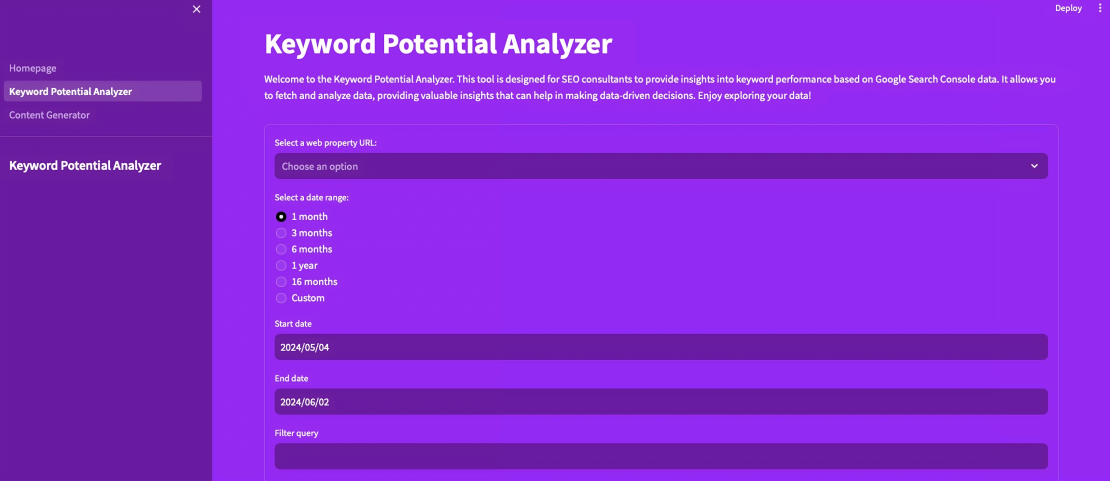

Keyword Potential Analyzer

One component of the data-driven SEO strategy at Effectix is the Keyword Potential Analyzer. It is not the only tool we use; treat it as one example of how machine learning can be applied in SEO and as inspiration for the wide range of other possibilities. The tool fetches keyword data either through the Google Search Console API or from Google BigQuery, if the Search Console data is backed up there. The BigQuery route provides more data than the API alone. By fetching and analyzing data from these sources, the Keyword Potential Analyzer offers valuable insights into keyword rankings, impressions, clicks, and more.

The workflow of the Keyword Potential Analyzer involves several steps that build upon each other. It starts with data collection, where data is retrieved from Google Search Console. The collected data then undergoes preprocessing to ensure its quality and consistency. This step involves cleaning the data, handling missing values, and transforming it into a suitable format for analysis.
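A minimal sketch of the preprocessing step, assuming the Search Console export has already been loaded into a pandas DataFrame with the columns `query`, `clicks`, `impressions`, and `position`. The column names, the `preprocess_keywords` helper, and the sample rows are assumptions for illustration, not the actual implementation behind the tool:

```python
import pandas as pd


def preprocess_keywords(raw: pd.DataFrame) -> pd.DataFrame:
    """Clean a Search Console-style export and aggregate it per query."""
    df = raw.copy()

    # Normalise the query text so "Green Tea " and "green tea" are one keyword.
    df["query"] = df["query"].astype("string").str.strip().str.lower()

    # Coerce metrics to numbers; values that cannot be parsed become NaN.
    for col in ["clicks", "impressions", "position"]:
        df[col] = pd.to_numeric(df[col], errors="coerce")

    # Drop rows with a missing query or missing metrics, and rows never shown.
    df = df.dropna(subset=["query", "clicks", "impressions", "position"])
    df = df[df["impressions"] > 0].copy()

    # Aggregate duplicates: sum the counts, weight position by impressions.
    df["weighted_position"] = df["position"] * df["impressions"]
    out = df.groupby("query", as_index=False).agg(
        clicks=("clicks", "sum"),
        impressions=("impressions", "sum"),
        weighted_position=("weighted_position", "sum"),
    )
    out["position"] = out["weighted_position"] / out["impressions"]
    out["ctr"] = out["clicks"] / out["impressions"]
    return out.drop(columns="weighted_position")


# Made-up sample rows, for illustration only.
sample = pd.DataFrame(
    {
        "query": ["Green Tea ", "green tea", "matcha", None],
        "clicks": [10, 5, "n/a", 3],
        "impressions": [100, 100, 50, 20],
        "position": [2.0, 4.0, 7.0, 1.0],
    }
)
clean = preprocess_keywords(sample)
print(clean)
```

Weighting the average position by impressions is one reasonable choice when merging duplicate rows; a plain mean would let rarely shown rows distort the result.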

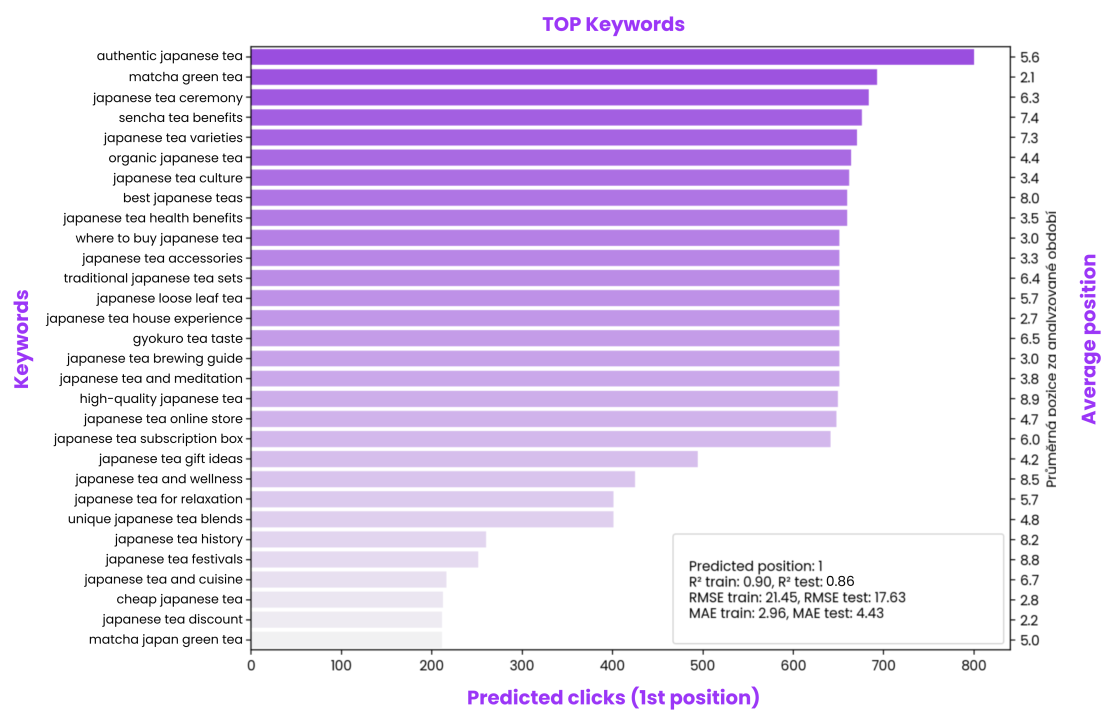

Next, a machine learning model is applied to predict keyword potential and identify high-performing keywords. In this case, we chose the Random Forest Regressor. Different tasks may call for different models, though, and what works well for one task is not necessarily the best choice for another, so it pays to experiment with several models and select the best-performing one for each task. The Random Forest Regressor learns from features of the keyword data, such as impressions and ranking position, to estimate the clicks each keyword can attract.

Once the model is trained, we can use it to predict clicks for a specific position on the search engine results page (SERP), such as the first position. This allows SEO professionals to set target positions for their keywords and estimate the traffic they could gain by ranking higher.
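The modelling and "what if we ranked first" steps can be sketched with scikit-learn as follows. This is an illustration on synthetic data, assuming clicks are predicted from impressions and average position only; the feature set, the made-up click-through curve, and the hyperparameters are assumptions, not the configuration used in the actual tool:

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor

# Synthetic keyword data (an assumption for illustration): clicks fall off
# as the average position gets worse.
rng = np.random.default_rng(42)
n = 500
impressions = rng.integers(100, 10_000, size=n)
position = rng.uniform(1, 20, size=n)
ctr = 0.3 / position  # made-up click-through curve
clicks = rng.poisson(impressions * ctr)

X = pd.DataFrame({"impressions": impressions, "position": position})
y = clicks

model = RandomForestRegressor(n_estimators=200, random_state=42)
model.fit(X, y)

# What-if: keep each keyword's impressions, but pretend it ranks first.
X_top = X.assign(position=1.0)
current_pred = model.predict(X)
top_pred = model.predict(X_top)

potential = pd.DataFrame(
    {
        "position": X["position"].round(1),
        "predicted_clicks_now": current_pred.round(0),
        "predicted_clicks_at_1": top_pred.round(0),
    }
)
potential["uplift"] = (
    potential["predicted_clicks_at_1"] - potential["predicted_clicks_now"]
)
print(potential.sort_values("uplift", ascending=False).head())
```

Two caveats apply to this kind of estimate. Tree-based models cannot extrapolate beyond the range seen in training, so a prediction for position 1 is only meaningful if the training data contains keywords that already rank near the top. The sketch also holds impressions fixed, which is a simplification.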

The model's performance can be evaluated using metrics like R-squared (R²), Root Mean Squared Error (RMSE), and Mean Absolute Error (MAE). These metrics quantify how well the model fits the data and how accurate its predictions are. It's important to divide the dataset into two parts: training and testing. The model is trained on the training data and then evaluated on the testing data, which it has not seen, giving a more reliable assessment of how it will perform on new data. If we don't split the data and only measure performance on the data the model was trained on, overfitting can go unnoticed. Overfitting occurs when the model reproduces the training values very well but fails to generalize to new data. Comparing performance on the training and testing data reveals this gap, so SEO professionals can tune the model and base their decisions on the Keyword Potential Analyzer with less risk of relying on an overfitted model.
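To make the metrics and the train/test comparison concrete, here is a small sketch in plain Python. The data is synthetic, and the "model" is a deliberately overfitting stand-in that memorises the training set; it is not the Random Forest from the article, and all names are hypothetical:

```python
import math
import random


def mae(y_true, y_pred):
    """Mean Absolute Error: average size of the errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error: like MAE, but penalises large errors more."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true, y_pred):
    """R-squared: share of the variance in y_true explained by the predictions."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Made-up data: clicks depend on position plus random noise.
random.seed(0)
positions = [random.uniform(1, 20) for _ in range(200)]
clicks = [300 / p + random.gauss(0, 15) for p in positions]

# Hold out the last 25% as unseen test data (the rows are in random order).
split = int(len(positions) * 0.75)
x_train, x_test = positions[:split], positions[split:]
y_train, y_test = clicks[:split], clicks[split:]


def predict_memorised(x):
    """Overfitting on purpose: return the clicks of the nearest training point."""
    nearest = min(range(len(x_train)), key=lambda i: abs(x_train[i] - x))
    return y_train[nearest]


train_pred = [predict_memorised(x) for x in x_train]
test_pred = [predict_memorised(x) for x in x_test]

for name, y, pred in [("train", y_train, train_pred), ("test", y_test, test_pred)]:
    print(f"{name}: R2={r2(y, pred):.3f} RMSE={rmse(y, pred):.1f} MAE={mae(y, pred):.1f}")
```

The memorising model scores perfectly on the training data because every training point is its own nearest neighbour, while the test scores are visibly worse. That gap is exactly what a train/test split is meant to expose.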

Data visualization techniques are then employed to create informative and visually appealing charts and graphs. Libraries like Seaborn can be utilized to generate insightful visualizations that help in understanding the patterns and trends in the keyword data. These visualizations provide a clear picture of the keyword performance and aid in identifying areas for improvement.
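As one possible example, the sketch below draws a correlation heatmap with Seaborn. The keyword metrics are made up, and the choice of a heatmap and the output file name `keyword_correlations.png` are assumptions for illustration:

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen so the script also runs on a server
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Made-up keyword metrics, for illustration only.
keywords = pd.DataFrame(
    {
        "clicks": [120, 45, 300, 8, 60, 15],
        "impressions": [1500, 900, 2800, 400, 1100, 700],
        "position": [3.2, 7.5, 1.8, 15.0, 6.1, 11.4],
    }
)
keywords["ctr"] = keywords["clicks"] / keywords["impressions"]

# Pairwise Pearson correlations between the metrics.
corr = keywords.corr()

fig, ax = plt.subplots(figsize=(5, 4))
sns.heatmap(corr, annot=True, fmt=".2f", cmap="coolwarm", vmin=-1, vmax=1, ax=ax)
ax.set_title("Correlation between keyword metrics")
fig.tight_layout()
fig.savefig("keyword_correlations.png")
```

Fixing the colour scale to the range -1 to 1 keeps the colours comparable between charts generated for different websites.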

Once the high-potential keywords are identified, the next step is to optimize the content accordingly. This is where automated content creation comes into play. By leveraging the insights gained from the Keyword Potential Analyzer, SEO professionals can generate content that is tailored to the identified keywords. Automated content creation techniques can be employed to produce articles, meta descriptions, and other content elements that are optimized for both search engines and readers.

Content Generation

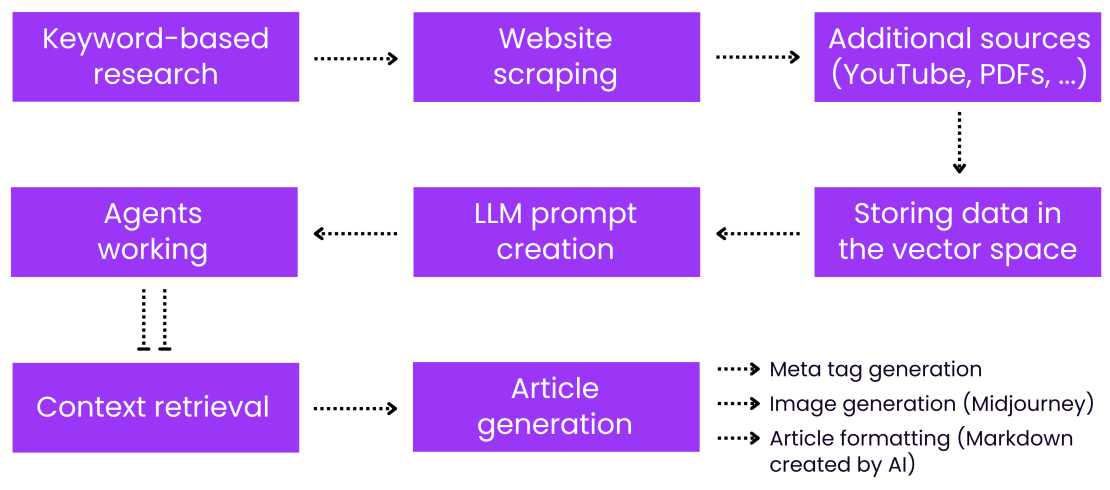

Another crucial aspect of SEO is the creation of high-quality, engaging content. With the advancements in AI and natural language processing (NLP), generating SEO-optimized articles has become more streamlined and effective. The article generating process involves several tools and techniques that work together seamlessly.

SerpApi is an API that enables the scraping of search engine results pages (SERPs). However, it's important to note that there are other services available for scraping Google search results. By leveraging SerpApi or similar tools, SEO professionals can gather valuable data on keyword rankings, featured snippets, and related searches. This data serves as a foundation for generating content that is relevant and optimized for search engines.
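To show what that data can look like in practice, here is a sketch that pulls page titles, links, and related searches out of a SerpApi-style JSON response. The `organic_results` and `related_searches` keys follow the structure of SerpApi's Google results as far as we are aware, so check them against the current documentation. The sample payload and the `summarize_serp` helper are hypothetical, and no network call is made:

```python
from typing import Any

# Hypothetical, hand-written payload shaped like a SerpApi Google response.
sample_response: dict[str, Any] = {
    "organic_results": [
        {
            "position": 2,
            "title": "Sencha brewing guide",
            "link": "https://example.com/sencha",
            "snippet": "Brew at a lower temperature.",
        },
        {
            "position": 1,
            "title": "What is Japanese green tea?",
            "link": "https://example.com/green-tea",
            "snippet": "An overview of common types.",
        },
    ],
    "related_searches": [
        {"query": "japanese green tea benefits"},
        {"query": "matcha vs sencha"},
    ],
}


def summarize_serp(response: dict[str, Any], top_n: int = 5) -> dict[str, list]:
    """Pick out the fields that are useful as input for content generation."""
    organic = sorted(
        response.get("organic_results", []),
        key=lambda result: result.get("position", float("inf")),
    )
    return {
        "top_pages": [
            {"title": r.get("title"), "link": r.get("link"), "snippet": r.get("snippet")}
            for r in organic[:top_n]
        ],
        "related_queries": [
            s["query"] for s in response.get("related_searches", []) if "query" in s
        ],
    }


summary = summarize_serp(sample_response)
for page in summary["top_pages"]:
    print(page["title"], "->", page["link"])
print("Related:", summary["related_queries"])
```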

DeepL, a powerful neural machine translation service, plays a significant role in multilingual content generation. It allows the translation of content into multiple languages while maintaining high quality and accuracy. However, it's worth mentioning that Google Translate can also be used for this purpose. This is particularly useful when targeting audiences in different countries or language regions. For example, if an SEO professional is writing about Japanese tea, they can leverage the insights from the Keyword Potential Analyzer, which may have identified several relevant keywords related to Japanese green tea. To gather more context and information, they can translate these keywords using DeepL or Google Translate and then scrape the search results from Japanese search engines. This approach enables them to obtain valuable information and insights directly from Japanese sources, enriching their content with authentic and culturally relevant details.

BeautifulSoup, a Python library for parsing HTML and XML documents, is another essential tool in the content generation process. It facilitates web scraping and data extraction from various sources. By using BeautifulSoup, SEO professionals can extract relevant information, such as article titles, meta descriptions, and content snippets, from top-ranking pages. This extracted data serves as inspiration and reference for generating SEO-optimized content. However, BeautifulSoup only parses the HTML it is given, so it falls short on websites that rely heavily on JavaScript to render their content. In such cases, a browser automation tool such as Selenium or Playwright, both of which have Python bindings, can be more effective (Puppeteer offers similar capabilities for Node.js). These tools drive a real browser, so they can interact with web pages, execute JavaScript, and extract dynamically generated content, ensuring a more comprehensive scraping process.
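A minimal sketch of such an extraction with BeautifulSoup, run on an inline HTML string so that it works without network access. The sample page and the `extract_page_summary` helper are made up for illustration:

```python
from bs4 import BeautifulSoup

# Made-up page standing in for a top-ranking result.
sample_html = """
<html>
  <head>
    <title>Japanese Green Tea Guide</title>
    <meta name="description" content="Types of Japanese green tea and how to brew them.">
  </head>
  <body>
    <h1>Japanese Green Tea</h1>
    <p>Sencha is the most widely drunk tea in Japan.</p>
    <h2>Brewing</h2>
    <p>Use water well below boiling point.</p>
  </body>
</html>
"""


def extract_page_summary(html: str) -> dict:
    """Extract the title, meta description, headings, and paragraphs of a page."""
    soup = BeautifulSoup(html, "html.parser")

    title = soup.title.get_text(strip=True) if soup.title else None

    # The meta description lives in <meta name="description" content="...">.
    meta = soup.find("meta", attrs={"name": "description"})
    description = meta.get("content") if meta else None

    headings = [h.get_text(strip=True) for h in soup.find_all(["h1", "h2"])]
    paragraphs = [p.get_text(" ", strip=True) for p in soup.find_all("p")]

    return {
        "title": title,
        "description": description,
        "headings": headings,
        "paragraphs": paragraphs,
    }


page = extract_page_summary(sample_html)
print(page["title"])
print(page["description"])
print(page["headings"])
```

In a real pipeline the HTML string would come from an HTTP client or, for JavaScript-heavy pages, from a browser automation tool.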

The content generation process typically begins with keyword research and analysis. The identified keywords are used to search for relevant content using tools like SerpApi and BeautifulSoup. The scraped data is then preprocessed to remove any unnecessary elements and noise. This preprocessing step ensures that the extracted content is clean and focused on the relevant information.

In addition to scraping search results, it's also possible to incorporate other sources of contextual information into the content generation process. For example, transcripts or captions from relevant YouTube videos can be included to provide additional insights and perspectives on the topic. Additionally, proprietary documents or internal knowledge bases containing relevant information can be leveraged to enrich the generated content. By combining data from various sources, the content generation process becomes more comprehensive and well-rounded.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is a cutting-edge technique that combines retrieval-based and generation-based approaches to produce high-quality, informative content. It leverages the power of large language models (LLMs) and vector databases to generate content that is both relevant and coherent.

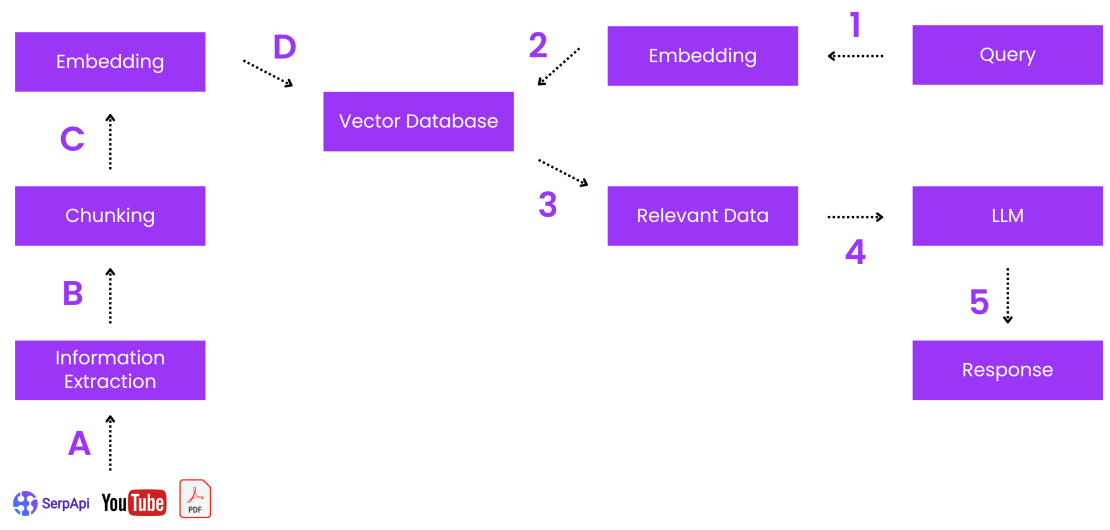

The RAG process begins with splitting the text data into smaller, manageable chunks to facilitate efficient processing and retrieval. Each chunk is then converted into a numerical vector using an embedding model. These embeddings capture the semantic meaning of the text, allowing for efficient similarity searches.

Next, the embeddings are stored in a vector database, which enables fast and efficient similarity searches. When a user queries the system, the relevant chunks of information are retrieved from the vector database based on their similarity to the query. This retrieval process ensures that the generated content is contextually relevant and aligned with the user's intent.
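The chunk, embed, store, and retrieve steps can be illustrated with a toy example in plain Python. The "embedding" here is just a word count and the "vector database" is an in-memory list; both are stand-ins chosen so the sketch runs without any external service, whereas a real system would use an embedding model and a proper vector store. All names are hypothetical:

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(
            self.items, key=lambda item: cosine(query_vector, item[1]), reverse=True
        )
        return [chunk for chunk, _ in ranked[:k]]


# Sample source texts, for illustration only.
documents = [
    "Sencha is a Japanese green tea made from steamed leaves.",
    "Matcha is a powdered green tea that is whisked with hot water.",
    "Hojicha is a green tea roasted over charcoal.",
]

store = TinyVectorStore()
for document in documents:
    for chunk in chunk_text(document):
        store.add(chunk)

results = store.search("How is matcha whisked?", k=1)
print(results)
```

Note that the query is embedded with the same function as the chunks. This matters in real systems too: the query and the documents must share one embedding model for their similarity to be meaningful.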

The retrieved information is then fed into a large language model, such as Claude 3 Opus or GPT-4. These LLMs have been pre-trained on vast amounts of text data and handle language and context well. They take the retrieved information as input, together with the user's query, and generate human-like responses that are coherent and informative.

By combining the strengths of retrieval-based and generation-based approaches, RAG offers a powerful approach to content generation. The retrieval component ensures that the generated content is grounded in relevant information, while the generation component allows for the creation of fluent and natural-sounding text. This combination results in highly informative and engaging articles that are optimized for both search engines and readers.

It's worth mentioning that we are utilizing the LlamaIndex framework to help build the RAG system. LlamaIndex provides a set of tools and functionalities that facilitate the implementation of RAG, making it easier to create a robust and efficient content generation pipeline. We will discuss the specifics of LlamaIndex and how it aids in the development of RAG in the upcoming section.

LlamaIndex and Agents

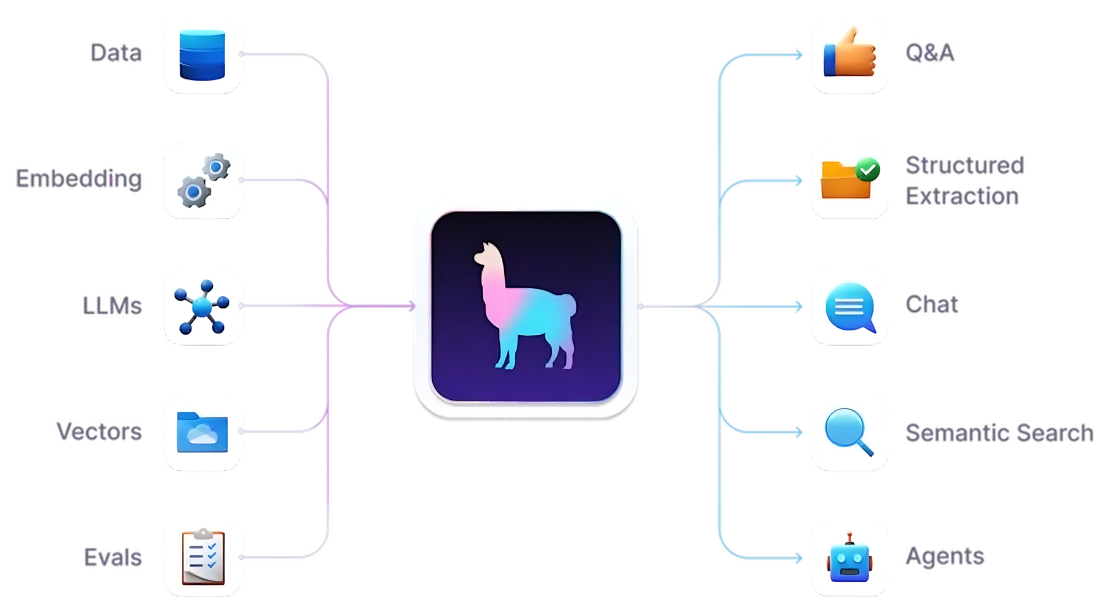

LlamaIndex is a framework for building LLM applications on top of your own data, which makes it a good fit for intelligent SEO systems. It provides a set of tools and functionalities that facilitate data retrieval, analysis, and agent creation. LlamaIndex offers several key features that make it a valuable asset in the SEO workflow.

One of the core features of LlamaIndex is data embedding. It provides built-in support for converting text data into vector representations, enabling efficient similarity search and retrieval. By embedding the data, LlamaIndex allows for quick and accurate identification of relevant information based on the user's query.

LlamaIndex seamlessly integrates with large language models (LLMs), allowing for the generation of human-like responses based on the retrieved information. This integration enables SEO professionals to leverage the power of LLMs to generate high-quality content that is both informative and engaging.

Another significant feature of LlamaIndex is semantic search. It enables the retrieval of relevant information based on the meaning and context of the query, rather than just keyword matching. This semantic search capability enhances the accuracy and relevance of the retrieved information, leading to more targeted and effective content generation.

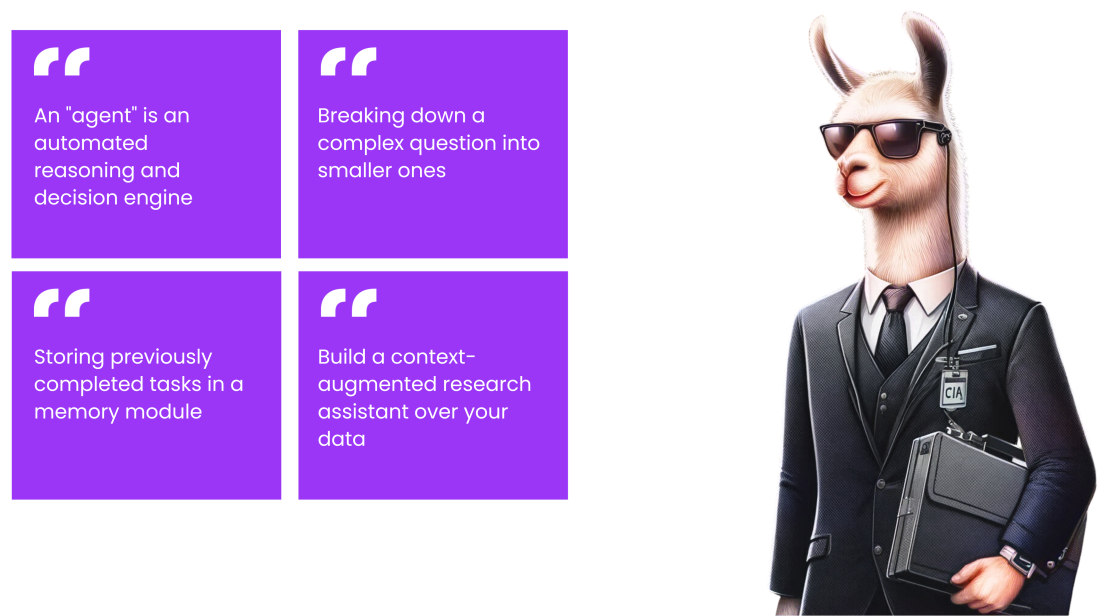

LlamaIndex also provides a framework for building agents that can perform complex tasks and leverage multiple tools and data sources. These agents can be customized to automate various aspects of the SEO process, from data retrieval and analysis to content generation and optimization.

This automation streamlines the workflow and lets SEO professionals focus on high-level strategic tasks while the agents handle the more repetitive and time-consuming ones.

It's crucial to use a proper model in the RAG system to ensure high-quality content generation. In our implementation, we are utilizing Claude 3 Opus, which has demonstrated outstanding results in the Czech language. However, it's important to have a system that is not limited to just one model. If advancements or changes occur in the field of language models, we can quickly adjust our code to accommodate the new model. This flexibility allows us to stay up-to-date with the latest developments and ensures that our RAG system remains adaptable and effective in generating high-quality content.
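One way to keep the code independent of a single model is to hide each provider behind a small common interface. The sketch below is a plain-Python illustration of that idea, not LlamaIndex's API and not our production code. `TextModel`, `EchoModel`, `MODEL_REGISTRY`, and `generate` are hypothetical names, and `EchoModel` is a stand-in that makes no API call:

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class TextModel(Protocol):
    """Anything that can turn a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in for local testing; a real adapter would call a provider's API."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt[:40]}"


# Swapping models means changing one entry here, not the calling code.
MODEL_REGISTRY: dict[str, Callable[[], TextModel]] = {
    "primary": lambda: EchoModel("primary-model"),
    "fallback": lambda: EchoModel("fallback-model"),
}


def generate(prompt: str, model_key: str = "primary") -> str:
    """Generate text with whichever model is registered under model_key."""
    model = MODEL_REGISTRY[model_key]()
    return model.complete(prompt)


print(generate("Write an intro about Japanese green tea."))
print(generate("Write an intro", model_key="fallback"))
```

With this structure, adopting a newly released model only requires writing one adapter class with a `complete` method and registering it.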

Claude 3 by Anthropic

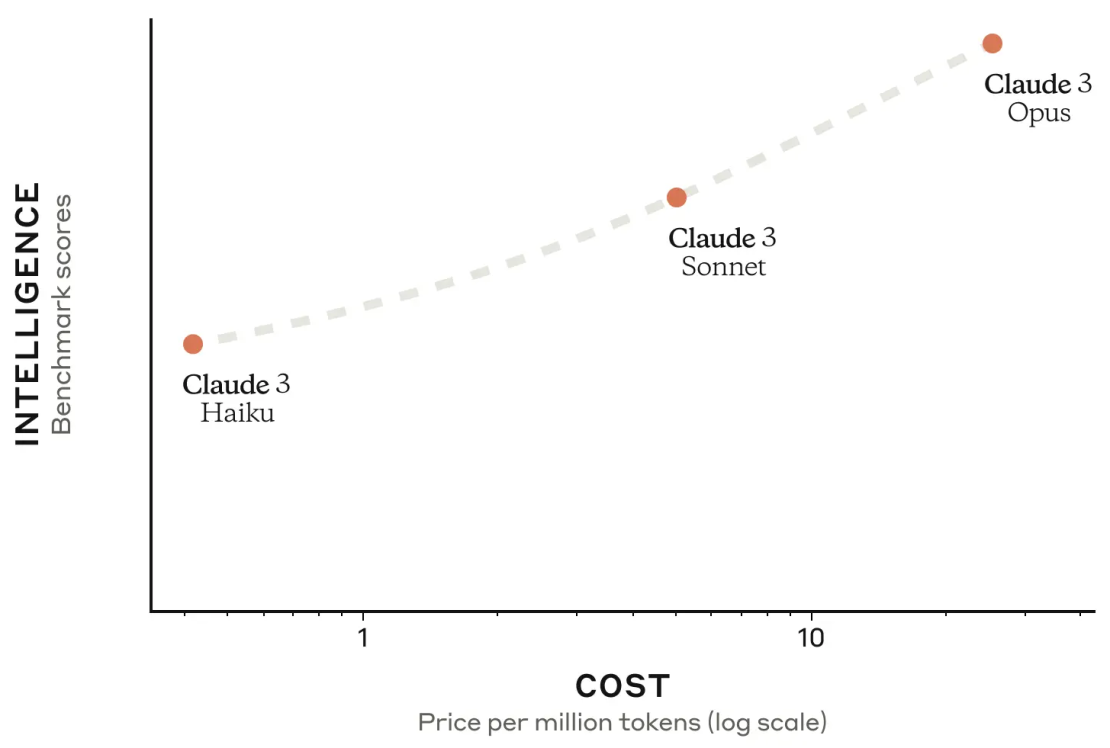

Anthropic's Claude 3 is a family of language models designed to cater to different levels of complexity and cost. These models offer a range of capabilities and performance levels, allowing SEO professionals to choose the most suitable model for their specific requirements and budget.

The Claude 3 family includes three models: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. Claude 3 Haiku is the fastest and most cost-efficient of the three and is suited to simpler tasks and quick iterations, which makes it a good fit for small-scale SEO projects or rapid prototyping.

Claude 3 Sonnet, on the other hand, offers a balanced approach. It provides a good trade-off between cost and intelligence, making it suitable for most SEO tasks. This model can handle more complex queries and generate high-quality content while still being cost-effective.

For SEO professionals seeking exceptional results, Claude 3 Opus is the top-tier model. It delivers outstanding performance and is capable of handling highly complex and nuanced tasks. Claude 3 Opus is particularly well-suited for generating content in languages like Czech, where, in our experience, it significantly outperforms other models, including GPT-4.

By selecting the appropriate Claude 3 model based on the specific requirements and budget of the SEO project, professionals can optimize their resources and achieve remarkable results. The Claude 3 models can be integrated into the SEO workflow to generate high-quality content, optimize meta tags, and provide intelligent recommendations. However, crafting effective prompts is essential for generating high-quality and relevant content.

Crafting Effective Prompts

One of the key aspects of utilizing AI-powered tools like LlamaIndex and Claude 3 is crafting effective prompts. Prompts are the instructions or queries provided to the AI system to guide its behavior and output.

When constructing prompts, it's essential to consider several factors. Firstly, the prompt should clearly define the desired output format. While articles are a common output format, it's important to note that the framework can be adjusted to generate various types of content, such as category descriptions, product descriptions, or even social media posts. This flexibility allows SEO professionals to leverage the power of AI across different aspects of their content strategy. Clearly specifying the desired output format in the prompt helps the AI system understand the expected structure and style of the generated content.

Secondly, the prompt should include relevant context and background information about the topic. This can include the target audience, the purpose of the content, and any specific guidelines or constraints. Providing sufficient context enables the AI system to generate content that is tailored to the intended audience and aligned with the overall objectives.

Thirdly, the prompt should incorporate the identified keywords and phrases that are relevant to the topic. These keywords serve as anchors for the AI system to focus on while generating the content. However, it's important to strike a balance and avoid overusing or stuffing keywords, as it can lead to unnatural and spammy content.

Fourthly, the prompt should follow a structured approach. For article generation, you can use frameworks such as AIDA (Attention, Interest, Desire, Action). This framework helps in crafting compelling and persuasive content by capturing the reader's attention, generating interest, creating desire, and ultimately encouraging action. By incorporating the AIDA principles into the prompt, the generated content becomes more engaging and effective in achieving its intended purpose.

Lastly, it's crucial to provide clear instructions on the desired tone, style, and formatting of the content. This can include specifying the language, the level of formality, the use of bullet points or numbered lists, and any other specific requirements. By providing these guidelines, the AI system can generate content that aligns with the brand's voice and style guidelines.

To facilitate the creation of well-structured and visually appealing content, you can ask the language model to generate the output in Markdown format. Markdown is a lightweight markup language that allows for easy formatting of text, headings, lists, links, and more. By requesting the generated content in Markdown, you can easily convert it into HTML or other formats, making it ready for publishing on websites or blogs. This approach streamlines the content creation process and ensures that the output is well-organized and visually engaging.

By crafting prompts that follow this structure and include the necessary elements, SEO professionals can leverage the power of AI to generate high-quality, SEO-optimized content efficiently.
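The elements described in this section can be combined in a small prompt-building helper. The following sketch is one possible arrangement; the function name `build_prompt`, its parameters, and the exact wording of the instructions are assumptions for illustration, not the prompts we use in production:

```python
def build_prompt(
    topic: str,
    keywords: list[str],
    audience: str,
    context_chunks: list[str],
    output_format: str = "article",
    tone: str = "friendly and factual",
    language: str = "English",
) -> str:
    """Assemble a content-generation prompt from the elements described above."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    sections = [
        # 1. Desired output format
        f"Write a {output_format} about {topic} in {language}.",
        # 2. Context and background
        f"Target audience: {audience}.",
        f"Use only facts supported by this context:\n{context}",
        # 3. Keywords, with a guard against stuffing
        "Work these keywords in naturally, without stuffing: " + ", ".join(keywords) + ".",
        # 4. Structure
        "Structure the text with AIDA: Attention, Interest, Desire, Action.",
        # 5. Tone, style, and formatting
        f"Tone: {tone}.",
        "Return the result as Markdown with headings and short paragraphs.",
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    topic="Japanese green tea",
    keywords=["sencha", "matcha"],
    audience="people new to loose-leaf tea",
    context_chunks=["Sencha is made from steamed leaves.", "Matcha is a powdered tea."],
    output_format="product description",
)
print(prompt)
```

Keeping each element in its own section makes it easy to adjust one aspect, such as the tone or the output format, without rewriting the whole prompt.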

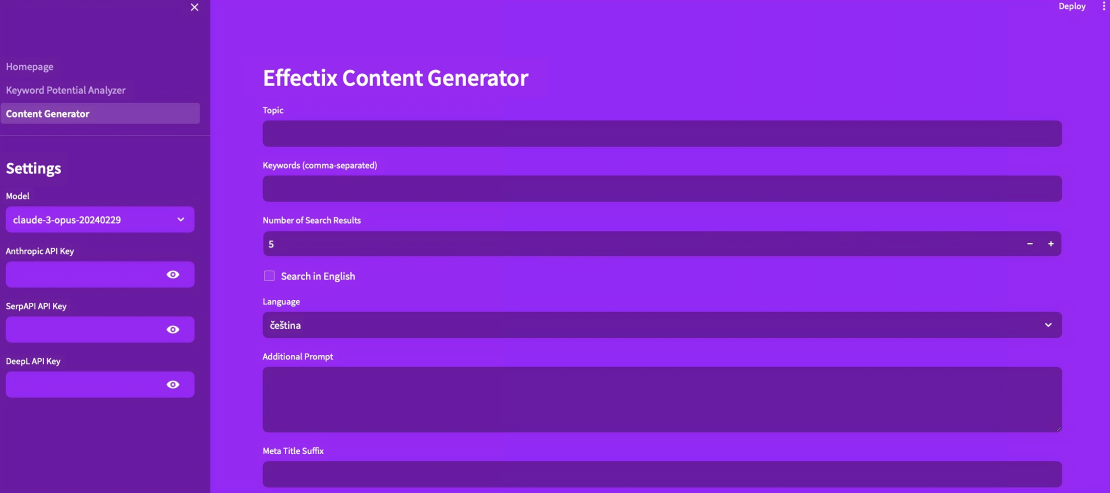

Streamlit App

The data science tools and techniques discussed in this article, the Keyword Potential Analyzer and the Content Generator, can be integrated into a user-friendly Streamlit application. Streamlit is a framework for creating interactive web applications in Python. While Jupyter notebooks for Python or R Markdown (.Rmd) files for R have been widely used in the past, they can be tricky and time-consuming for a large team in a digital marketing agency. Different team members run into different errors and environment issues, and a lot of time goes into troubleshooting. In contrast, a centralized Streamlit app simplifies the process and ensures a consistent user experience for everyone on the team.

By leveraging Streamlit, SEO professionals can build a custom application that encapsulates the entire SEO workflow, from data retrieval and analysis to content generation and optimization. The application can provide an intuitive interface for users to input their preferences, such as target keywords, desired SERP positions, and content generation parameters.

The Streamlit application can incorporate the various components discussed in this article, such as the Keyword Potential Analyzer, content generation tools (SerpApi, DeepL, BeautifulSoup), and LlamaIndex agents. Users can interact with the application to fetch and analyze data, generate SEO-optimized content, and receive recommendations based on the insights derived from the data science tools.

The application can be structured to create a user-friendly interface for selecting web properties, specifying date ranges, and filtering data. It can display informative visualizations, such as heatmaps and pair plots, to provide a clear understanding of keyword performance and correlations.

By packaging the data science tools and techniques into a Streamlit application, SEO professionals can make their workflow more accessible and efficient. The application can serve as a centralized platform for managing SEO strategies.

Conclusion

In conclusion, the integration of data science into SEO is not just a trend but a necessity for success in the modern digital landscape. By embracing the insights and techniques discussed in this article, SEO professionals can embark on a transformative journey that will elevate their strategies, drive meaningful results, and position them at the forefront of the industry. This article aims to inspire readers to explore the possibilities of developing their own data science applications tailored to their specific SEO needs. By leveraging the power of tools like Streamlit, SEO professionals can create customized solutions that address their unique challenges and optimize their workflows. The potential for innovation and growth in the field of SEO through data science is immense, and we encourage readers to take the first step in this exciting direction.

Do you want to know more about the improvement of the content? Contact me.